@hpy asked me for a quick review of technologies that could be used to add depth sensing to camera traps. The idea is that if camera trappers can get decent depth information from their cameras, they can automatically do a lot more with high precision (like estimating the size of passing animals more accurately).
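To make the size-estimation idea concrete, here is a rough sketch using the standard pinhole-camera relation (real size = pixel extent × depth / focal length in pixels). The function name and all the numbers are made up for illustration; a real camera would also need lens-distortion correction.

```python
def object_size_m(pixel_extent: float, depth_m: float, focal_px: float) -> float:
    """Pinhole-camera estimate of real-world size from image size and depth."""
    if focal_px <= 0:
        raise ValueError("focal_px must be positive")
    return pixel_extent * depth_m / focal_px


# Hypothetical numbers: the animal spans 300 px, is 4 m away,
# and the camera's focal length is 1000 px.
height_m = object_size_m(pixel_extent=300, depth_m=4.0, focal_px=1000.0)
print(f"{height_m:.2f} m")
```

Since size scales linearly with depth here, a 10% error in depth becomes a 10% error in the size estimate, which is why better depth data matters.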

I figured I might as well cross post this quick little list I made in case it inspires anyone, or if anyone has other ideas to toss into this arena!

Reminder also that there are lots of fun ideas for new camera traps out there, but a huge difficulty always seems to be making good cases that can survive lots of abuse from people, transportation, weather, and animals.

Here’s a quick and dirty list of technologies and possible ideas I talked about with my friends @juul and Matt Flagg:

Active:

ToF arrays (e.g. this 8x8 array from SparkFun: SparkFun Qwiic ToF Imager - VL53L5CX, SEN-18642)

- Autonomous Low-power mode with interrupt programmable threshold to wake up the host

- Up to 400 cm ranging

- 60 Hz frame rate capability

- Emitter: 940 nm invisible light vertical cavity surface emitting laser (VCSEL) and integrated analog driver
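As a rough idea of what you could do with an 8x8 grid of distances, here is a sketch that compares a frame against a stored "empty scene" frame and reports how far away the new thing is. It does not talk to the sensor at all: the function name, the 200 mm threshold, and the data are all made up, and the frames are just nested lists of millimetre values.

```python
def nearest_foreground_mm(frame, background, min_change_mm=200):
    """Find zones that are noticeably closer than the empty-scene background.

    frame, background: 8x8 nested lists of distances in millimetres.
    Returns (median_distance_mm, zone_count), or None if nothing changed.
    """
    changed = [
        d
        for row, bg_row in zip(frame, background)
        for d, bg in zip(row, bg_row)
        if bg - d >= min_change_mm
    ]
    if not changed:
        return None
    changed.sort()
    # Upper median of the changed zones, to ignore a few stray readings.
    return changed[len(changed) // 2], len(changed)


# Made-up data: empty scene at 3.5 m, then something ~1.2 m away
# covering a 3x2 patch of zones.
background = [[3500] * 8 for _ in range(8)]
frame = [row[:] for row in background]
for r in range(4, 7):
    for c in range(2, 4):
        frame[r][c] = 1200

print(nearest_foreground_mm(frame, background))
```

The zone count gives a very coarse angular size, which combined with the distance could feed a rough size estimate.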

IR pattern projection (e.g. Kinect, RealSense)

- Limits - some have difficulty in direct sunlight

calibrated Laser Speckle projection

- Could flash really bright laser speckle and photograph it

- could be visible in daylight, or have filters for specific channels

- could be very sensitive to vibration if the laser shifts and decalibrates

Structured light projection

- limits - very slow, can’t really work for moving things

LIDAR scanners

- limits - VERY expensive (like $600+)

AI Prediction Based

single view depth prediction (e.g. https://www.cs.cornell.edu/projects/megadepth/)

The results are only a machine-learning inference, not an actual depth measurement. It would also require lots of calibrated training data.

Passive:

Photogrammetry

Personally, the passive methods of depth estimation make me the most excited. Just using 2D camera images doesn’t add much new hardware into the mix, and it helps future-proof designs, since photogrammetric techniques (like https://colmap.github.io/) can keep improving and still be run on old 2D images.

Pre-calibrated Stereo Depth

- passive stereo depth (no active illumination): accuracy depends on adequate lighting and on the objects/scene having enough texture. Typical accuracy is approximately 3% of distance, but it varies with the object and the actual distance.

- Accuracy drops as the distance increases.
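Why accuracy drops with distance falls out of the basic stereo relation: depth = focal length × baseline / disparity, so a fixed disparity error turns into a depth error that grows with the square of the distance. A small sketch, where the focal length, baseline, and quarter-pixel matching error are illustrative guesses rather than specs of any particular camera:

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth (m) of a point from its stereo disparity (pinhole model)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error_m(depth_m, focal_px, baseline_m, disparity_error_px=0.25):
    """First-order depth error caused by a given disparity matching error."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


# Assumed rig: 450 px focal length, 7.5 cm baseline (hypothetical values).
FOCAL_PX, BASELINE_M = 450.0, 0.075
for z in (1.0, 2.0, 4.0, 8.0):
    err = depth_error_m(z, FOCAL_PX, BASELINE_M)
    print(f"{z:4.1f} m -> +/- {err:.3f} m ({100 * err / z:.1f}% of distance)")
```

Under this model the error as a percentage of distance is not constant; it doubles every time the distance doubles, so a single "3%" figure only holds around one working range.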

Off the shelf kits

OpenCV AI Kit Lite - stereo grayscale cameras +

Min depth perception: ~18 cm (using extended disparity search stereo mode)

Max depth perception: ~18 m
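The minimum depth is tied to how far the stereo matcher searches: the closest measurable point is the one with the largest disparity it will consider, so doubling the search range halves the minimum distance. A sketch with numbers I picked for illustration (450 px focal length, 7.5 cm baseline, 95 px standard search, 190 px extended search); these are assumptions, not taken from a spec sheet:

```python
def min_depth_m(focal_px, baseline_m, max_disparity_px):
    """Closest depth a stereo pair can report for a given disparity search range."""
    if max_disparity_px <= 0:
        raise ValueError("max_disparity_px must be positive")
    return focal_px * baseline_m / max_disparity_px


# All values below are assumed for illustration.
FOCAL_PX, BASELINE_M = 450.0, 0.075
standard = min_depth_m(FOCAL_PX, BASELINE_M, max_disparity_px=95)
extended = min_depth_m(FOCAL_PX, BASELINE_M, max_disparity_px=190)
print(f"standard search: {standard * 100:.0f} cm, extended search: {extended * 100:.0f} cm")
```

With these assumed values the extended search lands close to the ~18 cm figure quoted above, which at least suggests the numbers are in the right ballpark.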

Multi-camera arrays

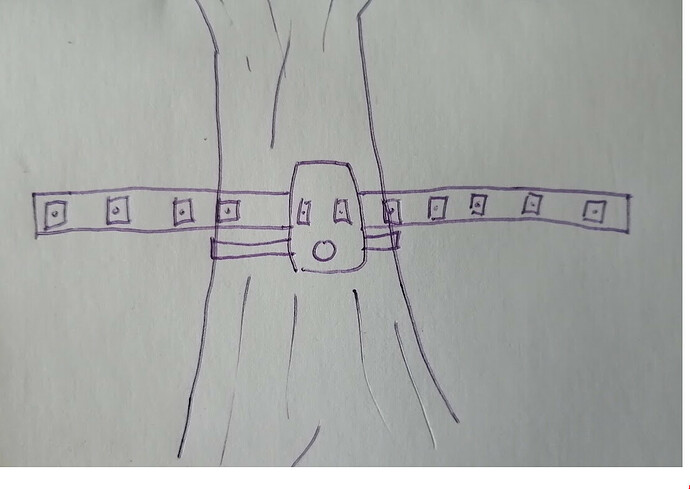

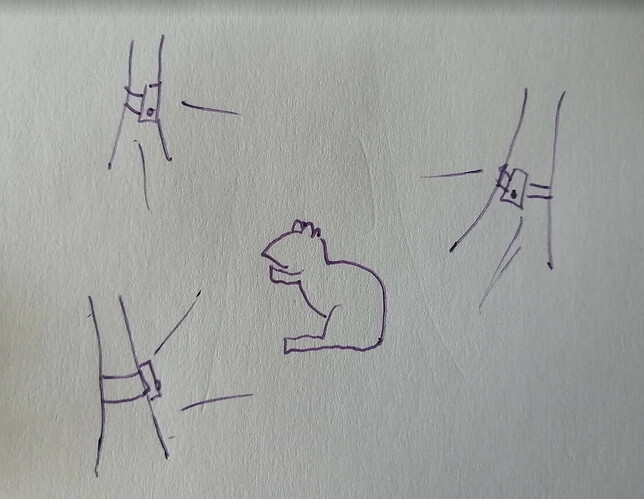

(This is my favorite idea, so I even drew some pictures)

- There are SUPER cheap ESP32 cameras available for like $6-$20 USD

Like this one for $14 which has a display we wouldn’t need https://www.tindie.com/products/lilygo/lilygo-ttgo-t-camera/ or this one for $20 that even has a case and nicer specs https://www.tindie.com/products/lilygo/lilygo-ttgo-t-camera-mini/

- You can put these ESP32 boards into “hibernation mode”, which needs only about 3-5 microamps to stay on (meaning they could last months)

- Get 5-10 of these cameras and set them up as an array (this could cost about the same as a single off-the-shelf camera trap)

- The array could all be connected to a single unit that is attached to a tree with telescoping arms

- or several cameras could be independently placed around an area the animal might go through

- then the cameras could be woken from hibernation by simple PIR motion detectors, grab images, and transfer them to a central node camera

- finally the array of photos could be processed through something like COLMAP to get a 3D reconstruction of each shot

- after setting up the cameras, a person may need to walk through the target area with something like a chessboard, for calibration to make the 3D reconstruction easier
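To show why calibrated camera positions matter, here is a bare-bones sketch of the geometric core: once you know where two cameras are and which direction each one sees the same feature, the 3D point is roughly where the two rays come closest. COLMAP does vastly more than this (feature matching, bundle adjustment, dense reconstruction); the function below is my own illustration, not anything from COLMAP, and the camera positions are made up.

```python
import math


def _dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def triangulate_midpoint(c1, d1, c2, d2):
    """Closest-point triangulation of two camera rays.

    c1, c2: camera centres (x, y, z); d1, d2: ray directions to the same feature.
    Returns (point, gap): the midpoint of the shortest segment joining the
    rays, and that segment's length (a rough consistency check).
    """
    w = [a - b for a, b in zip(c1, c2)]
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w), _dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel; depth is not observable")
    s = (b * e - c * d) / denom  # distance parameter along ray 1
    t = (a * e - b * d) / denom  # distance parameter along ray 2
    p1 = [ci + s * di for ci, di in zip(c1, d1)]
    p2 = [ci + t * di for ci, di in zip(c2, d2)]
    point = tuple((u + v) / 2 for u, v in zip(p1, p2))
    return point, math.dist(p1, p2)


# Made-up setup: two cameras 1 m apart, both seeing a feature 5 m in front.
point, gap = triangulate_midpoint(
    (0.0, 0.0, 0.0), (0.5, 0.0, 5.0),
    (1.0, 0.0, 0.0), (-0.5, 0.0, 5.0),
)
print(point, gap)
```

A large gap between the rays is a hint that the calibration has drifted or that the two cameras matched different features.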

Other camera modules are also available if you want fancier optics than the stock 2MP camera
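For a sanity check on the "could last months" claim for hibernating ESP32 cameras, here is a back-of-the-envelope battery estimate. Every number is an assumption for illustration (battery size, active current, wake time, triggers per day), and it ignores battery self-discharge plus whatever the PIR sensor and voltage regulator draw, which on real dev boards is often much more than the chip's own hibernation current.

```python
def battery_life_days(capacity_mah, sleep_ua, active_ma,
                      active_s_per_event, events_per_day):
    """Rough battery life for a node that sleeps and wakes briefly per trigger."""
    seconds_per_day = 24 * 3600
    active_s = active_s_per_event * events_per_day
    if active_s > seconds_per_day:
        raise ValueError("more active time than seconds in a day")
    sleep_s = seconds_per_day - active_s
    # Convert both phases to mAh per day (sleep current is given in microamps).
    mah_per_day = (sleep_ua / 1000 * sleep_s + active_ma * active_s) / 3600
    return capacity_mah / mah_per_day


# Assumed: 2000 mAh battery, 5 uA hibernation, 200 mA while shooting and
# transferring, 10 s awake per trigger, 20 triggers per day.
days = battery_life_days(2000, sleep_ua=5, active_ma=200,
                         active_s_per_event=10, events_per_day=20)
print(f"about {days:.0f} days")
```

With numbers like these the awake time dominates the budget, so keeping the capture-and-transfer step short matters more than shaving the sleep current further.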