All right, long overdue update… I’ll also link to this post in this camera trap thread.

Camera trap autopsy pics

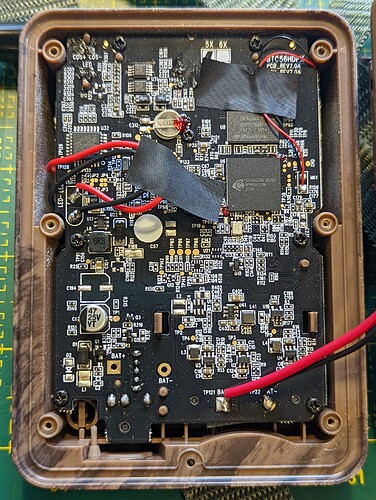

Thanks to @hikinghack, who recently conducted an autopsy on one of the camera traps I brought to the camera trap unconference session during our Panama Gathering. It’s the Browning Strike Force HD Pro X (BTC-5HDPX). The goal of the autopsy was to open the camera trap and obtain high-resolution images of its PCB, to see whether its trigger circuitry could be tapped into, similar to what @Harold described earlier in this thread.

The full set of autopsy photos is in this Flickr album shared under CC BY-SA 4.0. Here is a relevant photo from the album:

@Harold et al.: Any idea if this board could be tapped into??? What’s your assessment?

Unconference documentation from Panama

Josh generously took notes during our unconference session in Panama. For safekeeping, I made a backup of those notes here:

txti - Fast web pages for everybody (Internet Archive snapshot) (BTW, txti.es is a neat website that lets you quickly whip up Markdown-based web pages!)

Notable pieces of hardware

See above for this post on some related work on stereo camera traps. That said, I think @Harold’s approach of linking two existing camera traps together is more practical for field biologists who don’t always have the technical chops to build a camera trap from scratch.

With that in mind, here are a few more pieces of relevant hardware:

- @denisedd’s near-sighted camera traps and @hikinghack’s cool hack to fix them. Now @denisedd can use those camera traps to clearly identify butterfly species and track individuals through their markings!

- @jmwright’s posts immediately above this one on LoRa tech and stereo imaging cameras.

- The T-Camera S3 – An ESP32-S3 board with camera, display, PIR motion sensor, and microphone. Shared by @Albercook.

- An “Open source USB C camera with C mount lens, MIPI Sensor, Lattice FPGA, USB 3.0” discussed in this Hacker News thread (project repository).

- EsPiFF: An ESP32 in the Raspberry Pi form factor (also discussed in Hacker News).

- Bee Motion S3 on Crowd Supply - an “open source ESP32-S3 PIR motion sensing board”.

Relevant scientific papers

Below, I’m reposting links I shared with @Albercook to a few scientific papers that justify the need for a stereo camera trap: estimating wildlife populations from images requires spatial data.

Point transect method:

https://besjournals.onlinelibrary.wiley.com/doi/10.1111/2041-210X.12790

The other one I mentioned is the Random Encounter Model which, AFAIU, requires angle data. Here’s the classic paper on the topic:

https://doi.org/10.1111/j.1365-2664.2008.01473.x

If you look at the papers that cite it, you can see more recent developments with the model.

The model is inspired by the collision rates of molecules in gas models, as discussed here:

https://doi.org/10.1111/j.1469-185X.2007.00014.x
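To make it concrete why the Random Encounter Model needs angle data, here is a minimal Python sketch of the density estimator as I understand it from the classic paper: D = (y/t) · π / (v · r · (2 + θ)). The function name, parameter names, and units are my own choices for illustration. Here y is the number of independent contacts, t is the total camera time, v is the average animal speed, r is the detection radius, and θ is the detection arc in radians. Please check it against the paper before relying on it.

```python
import math


def rem_density(contacts, camera_days, speed_km_per_day, radius_km, angle_rad):
    """Sketch of the Random Encounter Model density estimate (animals per km^2).

    All names and units are illustrative assumptions:
      contacts          -- number of independent animal contacts (y)
      camera_days       -- total camera deployment time in days (t)
      speed_km_per_day  -- average animal travel speed (v)
      radius_km         -- radius of the camera detection zone (r)
      angle_rad         -- arc of the camera detection zone in radians (theta)
    """
    if camera_days <= 0 or speed_km_per_day <= 0 or radius_km <= 0:
        raise ValueError("camera_days, speed and radius must be positive")
    if angle_rad < 0:
        raise ValueError("angle_rad must be non-negative")

    trap_rate = contacts / camera_days  # contacts per camera-day (y / t)
    # The (2 + theta) term comes from the gas-model geometry of the detection zone.
    return trap_rate * math.pi / (speed_km_per_day * radius_km * (2.0 + angle_rad))
```

Both the radius and the angle of the detection zone sit in the denominator, so errors in either feed straight into the density estimate. That is where per-image distance and angle measurements (e.g. from a stereo setup) would help.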

And in “Assessing the camera trap methodologies used to estimate density of unmarked populations” they give a pretty good review of contemporary methods: