Hi all, I’ve been having conversations over the last few weeks and am getting psyched about the Hackathon. We have a real opportunity to get a proof of concept on several things:

- Connecting Public Lab’s Image Sequencer to the Our Sci platform, so Our Sci users can do image analysis and colorimetry on the fly, online or offline.

- Also, using dropbot and/or OpenDrop to run colorimetric lab methods (water quality like nitrates / total N and phosphorus; food quality like antioxidants / polyphenols; etc.) on large numbers of samples, integrating the colorimetric analysis and using Our Sci to collect and manage the data.

- Finally, connecting Our Sci to Public Lab’s Community Microscope and (hopefully) OpenFlexure and Alex’s dropbot spectrometer as well, to see what resolution we can achieve. That will determine which types of colorimetric analysis we can do (easy ones with big color changes, or hard ones with small color changes).

For reference, dropbot drops are about 2.5 mm x 2.5 mm and 0.2 mm thick (though they could be as thick as 0.4 mm).
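For scale, here is the back-of-the-envelope volume, treating the drop as a rectangular slab (a simplifying assumption, since real drops have rounded edges) and using 1 mm³ = 1 µL. The helper name is just for illustration.

```python
# Rough dropbot drop volume, treating the drop as a rectangular slab.
# Assumption: real drops have rounded edges, so this slightly overestimates.

def slab_volume_ul(length_mm: float, width_mm: float, thickness_mm: float) -> float:
    """Volume in microliters (1 mm^3 == 1 uL)."""
    return length_mm * width_mm * thickness_mm

thin_ul = slab_volume_ul(2.5, 2.5, 0.2)   # 1.25 uL
thick_ul = slab_volume_ul(2.5, 2.5, 0.4)  # 2.5 uL
small_cuvette_ul = 0.7 * 1000             # 0.7 ml cuvette = 700 uL

print(f"0.2 mm drop: {thin_ul:.2f} uL")
print(f"0.4 mm drop: {thick_ul:.2f} uL")
print(f"0.7 ml cuvette / thin drop: ~{small_cuvette_ul / thin_ul:.0f}x")
```

Volume is only part of the story: for colorimetry the optical path through the drop (0.2 to 0.4 mm) matters more, and a standard cuvette typically has a 10 mm path.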

To do colorimetry on the dropbot and/or OpenDrop, we have 4 options:

-

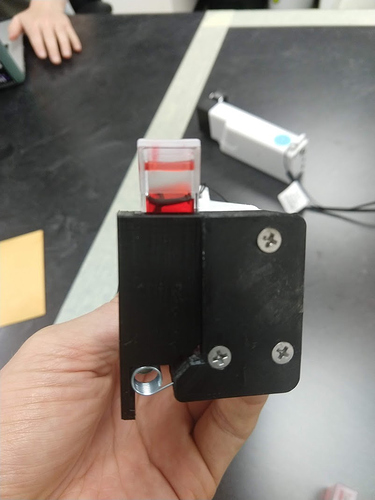

reflectometer (1) - Image below is the Our Sci reflectometer, but any could do… we use this now in the lab for colorimetry, with 5ml or 0.7ml cuvettes. Could use fibers to direct light out and back in a more controlled manner.

.

- reflectometer (2) - Alex from U of T has built a reflectometer around the dropbot, focused on achieving high enough resolution for real applications. His setup uses a longer light path through the very, very thin drop, which should help. This is the option most likely to achieve the resolution we’ll need.

- microscope - Public Lab’s Community Microscope, or just a Raspberry Pi camera zoomed in. This would give us a larger image, so we could average over more pixels. In this case, we’d probably want to use an image analysis library like PL’s Image Sequencer to do the color comparison.

- cell phone - Maybe a cell phone can zoom in far enough to be useful… that way, we could import the image into Our Sci’s data collection app, apply Image Sequencer or similar image analysis, and see what we get.
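For the microscope and cell phone options, the core operation is averaging color over a region of the drop image. Image Sequencer is a JavaScript library with its own modules, so the following is not its API; it’s a minimal Python sketch of the same idea, assuming the image has already been decoded into rows of (R, G, B) tuples. `roi_stats` is a hypothetical helper name.

```python
import math
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255


def roi_stats(image: Sequence[Sequence[Pixel]], x0: int, y0: int,
              w: int, h: int) -> Tuple[List[float], List[float]]:
    """Per-channel mean and standard error over a w x h region of an RGB image.

    `image` is indexed image[y][x]; (x0, y0) is the region's top-left corner.
    """
    pixels = [image[y][x] for y in range(y0, y0 + h) for x in range(x0, x0 + w)]
    n = len(pixels)
    if n < 2:
        raise ValueError("region must contain at least 2 pixels")
    means: List[float] = []
    std_errs: List[float] = []
    for channel in range(3):
        values = [p[channel] for p in pixels]
        mean = sum(values) / n
        sample_var = sum((v - mean) ** 2 for v in values) / (n - 1)
        means.append(mean)
        # Standard error of the mean shrinks roughly as 1/sqrt(n) if pixel
        # noise is independent (often optimistic for real camera images).
        std_errs.append(math.sqrt(sample_var / n))
    return means, std_errs


# Tiny synthetic 4x4 "image": a drop region with alternating pixel noise in red.
img = [[(200 + (x + y) % 2 * 4, 120, 60) for x in range(4)] for y in range(4)]
means, errs = roi_stats(img, 0, 0, 4, 4)
print(means, errs)
```

A bigger region (more pixels) tightens the estimate of the mean color, which is the main advantage the zoomed-in image options have over a single-point reading.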

The problem is not that we can’t see color with these options… it’s that we probably can’t get enough resolution. Here are some things we can try to improve resolution…

- averaging - the claaaaasic solution to all signal quality questions… true for all of the options above, but especially images.

- identify change over time - because we can take many images or measurements, we can track the color change over time as the reaction goes to completion. If we know what the reaction curve should look like, we can fit it and calculate the asymptote (the final color) even if the data are noisy. This may help.

- differential signal - not sure exactly, but in traditional signal measurement (like a voltage), a good way to increase gain is to compare one signal against another, effectively subtracting a baseline, and then amplify the difference… not sure if one can do this with images, but it’s an idea. That said, Image Sequencer compares two squares, which is similar to differential signal analysis… so this is definitely something we can do.

- environmental conditions - play with the lighting or other factors around the sample… can we improve signal that way? Not sure here.

- make droplets thicker - we can go from 0.2 mm to 0.4 mm thick, which doubles the light path through the sample and so doubles our signal (the absorbance)…

- … ???
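For the change-over-time idea, one way to “calculate the asymptote” is to fit an assumed reaction curve. The sketch below assumes first-order-like behavior, y(t) = A + B·exp(-k·t), where A is the endpoint signal; whether a given colorimetric reaction actually follows this shape is an assumption to check per method. Because A and B enter linearly once k is fixed, it grid-searches k and solves A and B by ordinary least squares, a simple dependency-free stand-in for a general nonlinear fitter such as SciPy’s `scipy.optimize.curve_fit`. Function names are hypothetical.

```python
import math
from typing import Optional, Sequence, Tuple


def fit_fixed_k(t: Sequence[float], y: Sequence[float],
                k: float) -> Optional[Tuple[float, float, float]]:
    """Least-squares (A, B, SSE) for y ~ A + B*exp(-k*t) with the rate k held fixed."""
    e = [math.exp(-k * ti) for ti in t]
    n = len(t)
    s_e, s_ee = sum(e), sum(ei * ei for ei in e)
    s_y, s_ey = sum(y), sum(ei * yi for ei, yi in zip(e, y))
    det = n * s_ee - s_e * s_e  # determinant of the 2x2 normal equations
    if abs(det) < 1e-12:
        return None  # A and B are not separable for this k
    a = (s_ee * s_y - s_e * s_ey) / det
    b = (n * s_ey - s_e * s_y) / det
    sse = sum((yi - (a + b * ei)) ** 2 for ei, yi in zip(e, y))
    return a, b, sse


def fit_asymptote(t: Sequence[float], y: Sequence[float],
                  k_grid: Sequence[float]) -> Tuple[float, float, float]:
    """Try each k, solve A and B exactly, and return (A, B, k) with the lowest SSE."""
    best = None
    for k in k_grid:
        fit = fit_fixed_k(t, y, k)
        if fit is not None and (best is None or fit[2] < best[3]):
            best = (fit[0], fit[1], k, fit[2])
    if best is None:
        raise ValueError("no usable k in grid")
    return best[0], best[1], best[2]


# Synthetic example: the signal approaches 0.8 at rate 0.05/s, but we only
# record the first 40 s, before the reaction has finished.
times = [float(s) for s in range(0, 41, 2)]
signal = [0.8 - 0.6 * math.exp(-0.05 * s) for s in times]
k_grid = [0.005 * i for i in range(1, 201)]  # 0.005 .. 1.0 per second
a, b, k = fit_asymptote(times, signal, k_grid)
print(f"estimated endpoint A = {a:.3f}, rate k = {k:.3f}")
```

The example uses noiseless synthetic data to show that the endpoint can be recovered from only the early part of the curve; with real, noisy data the uncertainty on A grows the further the measurements stop short of the plateau.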
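The differential-signal and thicker-droplet ideas both fit the standard absorbance picture: compare the sample’s intensity against a blank or reference reading (the baseline comparison, done as a ratio in log space), and under the Beer-Lambert law, absorbance scales linearly with path length. This is a minimal sketch assuming we can get linear intensity readings for a sample patch and a blank patch; the function names and numbers are hypothetical.

```python
import math


def absorbance(i_sample: float, i_blank: float) -> float:
    """Absorbance relative to a blank/reference: A = log10(I_blank / I_sample).

    Assumes both intensities are linear in light level (e.g. raw sensor
    counts), not gamma-encoded JPEG pixel values.
    """
    if i_sample <= 0 or i_blank <= 0:
        raise ValueError("intensities must be positive")
    return math.log10(i_blank / i_sample)


def expected_intensity(i_blank: float, eps_c_per_mm: float, path_mm: float) -> float:
    """Beer-Lambert: A = eps * c * l, so I = I_blank * 10**(-A)."""
    return i_blank * 10 ** (-eps_c_per_mm * path_mm)


# Made-up numbers for illustration: the blank reads 200 counts and the dye
# gives an absorbance of 0.25 per mm of path.
I_BLANK = 200.0
EPS_C = 0.25
for path in (0.2, 0.4):  # the two drop thicknesses discussed above
    i_s = expected_intensity(I_BLANK, EPS_C, path)
    print(f"{path} mm: sample {i_s:.1f} counts, "
          f"blank - sample = {I_BLANK - i_s:.1f}, A = {absorbance(i_s, I_BLANK):.3f}")
```

Absorbance doubles exactly between 0.2 mm and 0.4 mm, while the raw blank-minus-sample difference grows by somewhat less than 2x, because transmitted light falls off exponentially with path length.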

So… for the Hackathon, we don’t need to start with actual tests. First, we really just need to characterize the signal quality of each instrument/option and compare it to the requirements of a given test (like nitrates or phosphorus in water). That way we can use food coloring in water rather than paying 10 bucks every time we want to test something out.

- Test the resolution and repeatability of each of the above 4 options using food coloring and standard dilutions. How do they compare? Are any ‘good enough’ for actual applications?

- Optimize using the approaches mentioned above (more averaging, change over time, etc.). Create an ‘optimized’ method for each.

- Try to run an actual, real-world sample, like water quality or something… can we do it???
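For the first step, characterizing each option with food coloring and standard dilutions, here is a minimal sketch of the numbers we might compute: a linear calibration (signal vs. concentration), the replicate spread at each level as a repeatability measure, and a detection limit using the common rule of thumb of about 3 × (blank standard deviation) / slope. The data layout, function names, and values are hypothetical. Comparing that detection limit against the concentration range a real test (e.g. nitrates) needs is one way to decide whether an option is ‘good enough’.

```python
import statistics
from typing import Dict, List, Tuple


def linear_fit(x: List[float], y: List[float]) -> Tuple[float, float]:
    """Ordinary least-squares slope and intercept."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx


def characterize(readings: Dict[float, List[float]]):
    """Summarize one instrument/option from replicate readings of standard dilutions.

    `readings` maps concentration -> replicate signals. It must include a blank
    at concentration 0 with at least 2 replicates, plus at least one other level.
    Returns (slope, intercept, per-level standard deviation, detection limit).
    """
    if 0.0 not in readings or len(readings[0.0]) < 2 or len(readings) < 2:
        raise ValueError("need >= 2 blank replicates at 0 and at least one other level")
    levels = sorted(readings)
    means = [statistics.fmean(readings[c]) for c in levels]
    slope, intercept = linear_fit(levels, means)
    if slope == 0:
        raise ValueError("no response to concentration")
    # Repeatability: spread of replicates at each level.
    sds = {c: statistics.stdev(readings[c]) for c in levels if len(readings[c]) > 1}
    # Rule of thumb: detection limit ~ 3 x blank SD / slope.
    lod = 3 * sds[0.0] / abs(slope)
    return slope, intercept, sds, lod


# Hypothetical food-coloring dilution series (concentration in % of stock).
data = {
    0.0: [0.01, 0.00, 0.02],
    1.0: [0.11, 0.10, 0.12],
    2.0: [0.21, 0.20, 0.22],
}
slope, intercept, sds, lod = characterize(data)
print(f"slope={slope:.3f}/%, intercept={intercept:.3f}, LOD~{lod:.2f}%")
```

Running the same dilution series on each of the 4 options gives directly comparable numbers, before spending money on real test kits.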

So, lots of work here… I’m going to spit out some things we may want to get together before we start…

@Bronwen - can you bring a wide range of microscopes and Raspberry Pis? Also… if you have colorimetric tests that you like, bring some so we can test our optimized ‘final product’. Finally, can you connect with your dev team (@jeff, Vibhor and Divy) and make sure you have something that’s close to ready to go?

@nexus - Manuel, let’s make sure we can get image input into the system by the time the conference rolls around (sensor.js visualization feature), and try to be available for tech support during the Hackathon. Also, test out Image Sequencer and see where the issues are, then report back to Jeff/Vibhor/Divy so we can get it connected and tested.

@MattR If you have an OpenFlexure scope, please bring it! We may be able to try it out.

@ryanfobel Ideally we’d like 4-5 dropbots to play with, so we’re not bumping elbows as we test.

@bengtsjolen Can you get/bring an OpenDrop or several? It’d be great to try this out there as well!

@gbathree And of course for me: I need to make sure to bring some reflectometers, plus some 1 mm fiber and fiber cutters…